Building on our Activity-based Modeling, Simulation and Learning (AMSL) methodology, we design frugal, interpretable, and explainable models and simulations for complex adaptive systems.

Towards brain efficiency

A forthcoming article in Nature Communications Engineering, available on arXiv:2501.10425, introduces Delay Neural Networks (DeNNs). They are the first spiking neural networks that rely purely on temporal coding: information is represented by when spikes occur, not by how often. This temporal approach naturally leads to sparse activity, and our results show that it can cut resource use dramatically: energy consumption is reduced by up to ~13× and the number of computations by up to ~6× compared to strong spiking baselines, while accuracy remains competitive. These gains were confirmed through analytical estimates on SpiNNaker, the neuromorphic hardware platform of the Human Brain Project.
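To make the difference between temporal and rate coding concrete, here is a minimal sketch in Python. It is not the DeNN model from the article: the functions `latency_encode` and `rate_encode`, the time window `t_max`, and the input values are all hypothetical and chosen only for illustration. The sketch shows why a code based on spike timing tends to be sparse: each input emits a single spike, whereas a rate code needs many spikes to convey the same value.

```python
import random

def latency_encode(values, t_max=1.0):
    """Time-to-first-spike code: one spike per input, larger values fire earlier."""
    return [t_max * (1.0 - v) for v in values]

def rate_encode(values, n_steps=100, seed=0):
    """Rate code: at each time step, input i spikes with probability values[i]."""
    rng = random.Random(seed)
    return [[rng.random() < v for v in values] for _ in range(n_steps)]

values = [0.9, 0.5, 0.1]  # hypothetical normalized intensities in [0, 1]

spike_times = latency_encode(values)   # information carried by *when* spikes occur
spike_trains = rate_encode(values)     # information carried by *how often* spikes occur

n_temporal = len(spike_times)                     # exactly one spike per input
n_rate = sum(sum(step) for step in spike_trains)  # many spikes per input

print("spike times:", spike_times)
print("spikes used (temporal):", n_temporal)
print("spikes used (rate):", n_rate)
```

In this toy setting the temporal code always uses three spikes, one per input, while the spike count of the rate code grows with the length of the observation window.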

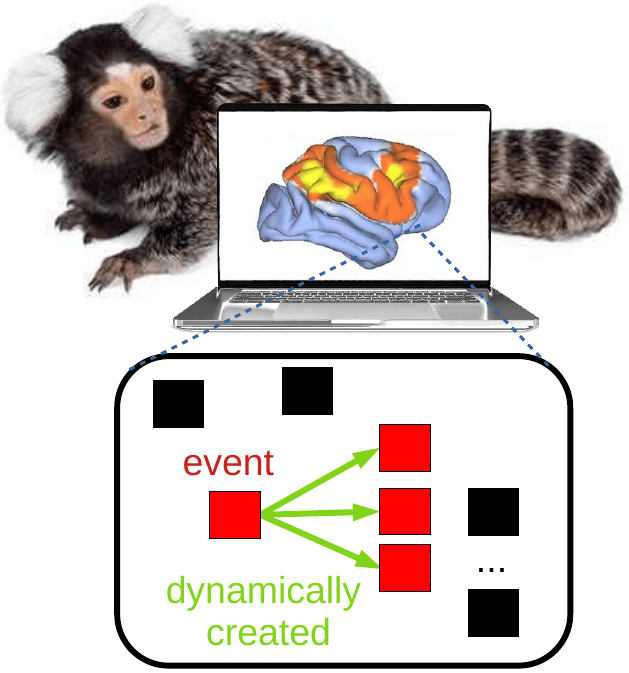

Frugal simulation of large neural networks

We published in Neural Computation (MIT Press) a new algorithm that takes advantage of the sparse activity of biological neurons.

In the context of AI, the real-time simulation of brains composed of billions of neurons remains a major challenge in software and hardware engineering.

Thanks to our new algorithm, we are able to simulate neural networks the size of a small monkey's brain on a simple desktop computer, whereas supercomputers were usually required!

You can listen to the interview (in French) on France Culture national radio, or read the article (in French) from the CNRS computer science institute.

For more information please refer to the full article.
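The general idea of exploiting sparse activity can be illustrated with a small event-driven simulation. The sketch below is not the algorithm from the article: it is a generic, simplified scheme using non-leaky integrate-and-fire neurons, and the function `simulate`, the synapse format, and the example network are hypothetical. What it shows is that the cost of the simulation depends on the number of spike events, not on the number of neurons multiplied by the number of time steps.

```python
import heapq

def simulate(synapses, inputs, threshold=1.0, t_end=100.0):
    """Event-driven simulation of non-leaky integrate-and-fire neurons.

    synapses: {source: [(target, weight, delay), ...]} with delay > 0
    inputs:   [(arrival_time, target, weight), ...] external input events
    Returns (spikes, n_updates), where spikes is a list of (time, neuron).
    """
    queue = list(inputs)
    heapq.heapify(queue)      # events are processed in order of arrival time
    potential = {}            # state is stored only for neurons that received input
    spikes, n_updates = [], 0
    while queue:
        t, target, weight = heapq.heappop(queue)
        if t > t_end:
            break
        n_updates += 1
        v = potential.get(target, 0.0) + weight
        if v >= threshold:    # the neuron fires: reset it and schedule deliveries
            v = 0.0
            spikes.append((t, target))
            for nxt, w, delay in synapses.get(target, []):
                heapq.heappush(queue, (t + delay, nxt, w))
        potential[target] = v
    return spikes, n_updates

# Hypothetical chain 0 -> 1 -> 2; any other neuron in the network stays silent
# and therefore costs nothing.
synapses = {0: [(1, 1.0, 2.0)], 1: [(2, 0.6, 1.5)]}
inputs = [(0.0, 0, 1.0)]

spikes, n_updates = simulate(synapses, inputs)
print(spikes)      # [(0.0, 0), (2.0, 1)]
print(n_updates)   # 3
```

A clock-driven loop would instead update every neuron at every time step, whatever the activity. Here only three state updates are performed, one per delivered event.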

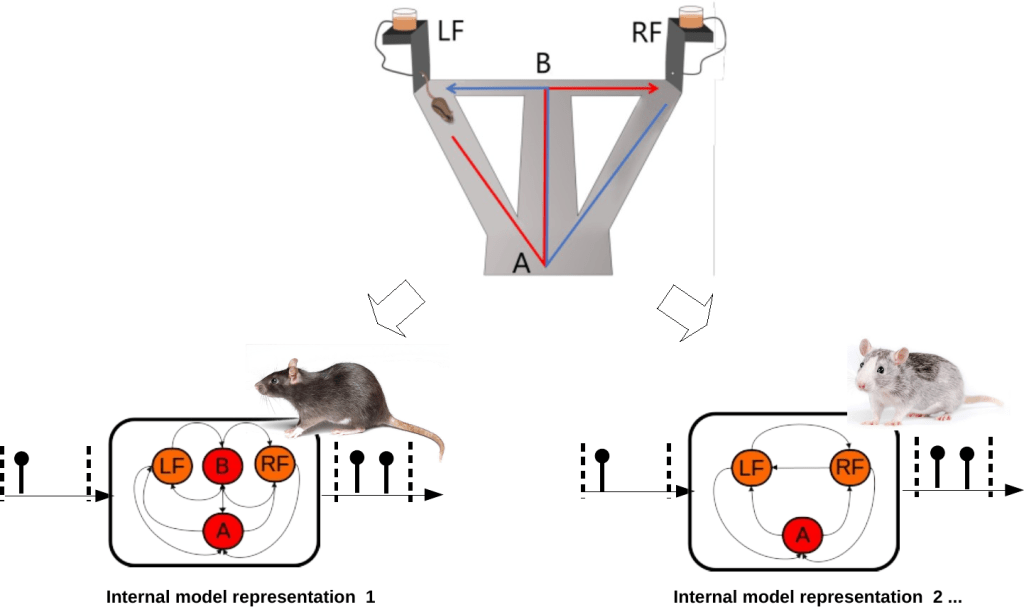

Cognitively-inspired structure learning to explain individual behaviors

Traditional reinforcement learning algorithms focus primarily on finding and carrying out the actions that maximize rewards. But real-world situations can be trickier. We developed a new structure learning approach, in which an agent's internal structure consists of its learning rule and its representation of the environment. Structure learning makes it possible to find the various internal structures of agents that best explain their behaviors, which are not necessarily optimal. For example, using dynamic structure learning, we were able to identify the various dynamically changing strategies developed by rats in real maze learning experiments.
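One simple way to picture the search for an explanatory internal structure is model comparison: several candidate learning rules are scored by how well they predict the observed choices, and the best-scoring one is kept. The sketch below is only an illustration under strong assumptions, not our structure learning method: the two candidate rules (a delta-rule learner with a softmax policy and a win-stay/lose-shift heuristic), their parameters, and the choice and reward sequences are all hypothetical.

```python
import math

def loglik_q_learning(choices, rewards, alpha=0.3, beta=3.0):
    """Log-likelihood of the choices under a delta-rule learner with softmax policy."""
    q = [0.0, 0.0]                      # estimated value of each of the two options
    ll = 0.0
    for c, r in zip(choices, rewards):
        z = [math.exp(beta * v) for v in q]
        ll += math.log(z[c] / sum(z))   # probability the model assigns to the choice
        q[c] += alpha * (r - q[c])      # delta-rule update of the chosen option
    return ll

def loglik_win_stay_lose_shift(choices, rewards, eps=0.1):
    """Log-likelihood under win-stay/lose-shift with a lapse rate eps."""
    ll = math.log(0.5)                  # the first choice is assumed random
    for t in range(1, len(choices)):
        predicted = choices[t - 1] if rewards[t - 1] else 1 - choices[t - 1]
        ll += math.log(1 - eps if choices[t] == predicted else eps)
    return ll

# Hypothetical data: option chosen (0 or 1) and reward received (0 or 1) per trial.
choices = [0, 1, 1, 0, 0, 0, 1, 1]
rewards = [0, 1, 0, 1, 1, 0, 1, 1]

scores = {
    "q_learning": loglik_q_learning(choices, rewards),
    "win_stay_lose_shift": loglik_win_stay_lose_shift(choices, rewards),
}
best = max(scores, key=scores.get)
print(scores)
print("best explanation:", best)
```

On this made-up sequence the win-stay/lose-shift rule explains the choices better, even though it is not a reward-maximizing strategy. A dynamic variant could, for instance, repeat the comparison over a sliding window of trials to detect changes of strategy.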

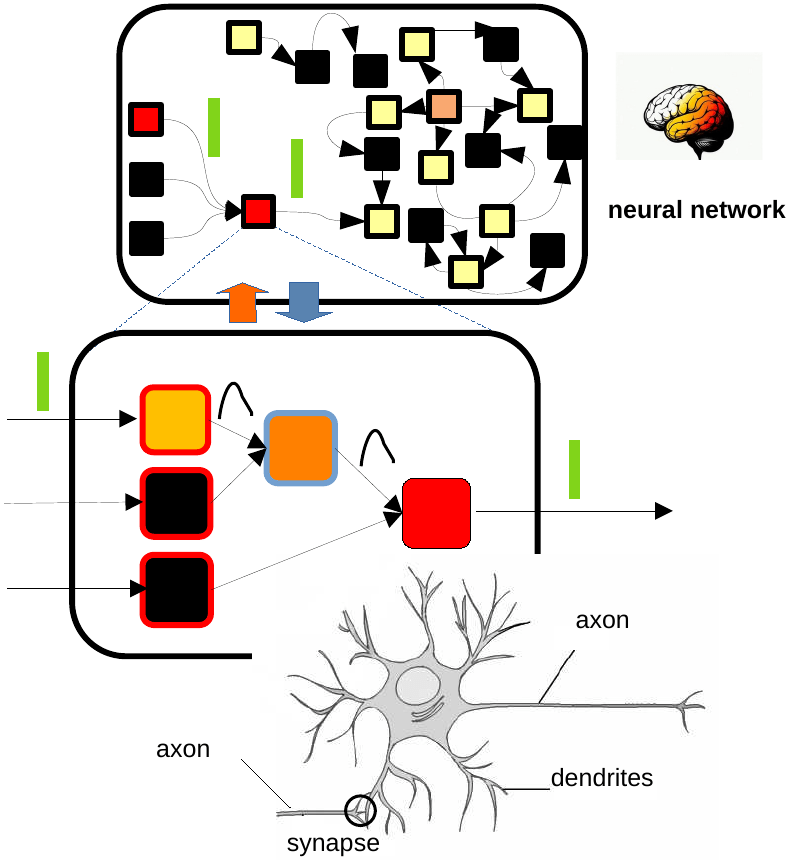

Abstraction of the complex topology of dendrites into delays

Neurons have large dendritic trees that are neglected in usual models. We showed that it is not the topology of a neuron's dendrites that explains its dynamics, but rather their temporal properties.

Frugal bio-inspired neural networks

Usual artificial neural network architectures are still far from biological ones. We are working to add bio-inspired properties to artificial neural networks. Like the brain, these new classes of bio-inspired networks are frugal in computations while achieving good performance.

More publications here.